Gaussian Process: Implementation in Python

Contents

Gaussian Process: Implementation in Python#

In this section Gaussian Processes regression, as described in the previous section, is implemented in Python. First the case of predefined mean- and covariance-function is implemented. In the second part these functions are learned from data.

import numpy as np

from scipy import r_

from matplotlib import pyplot as plt

np.set_printoptions(precision=5,suppress=True)

Gaussian Process for Regression#

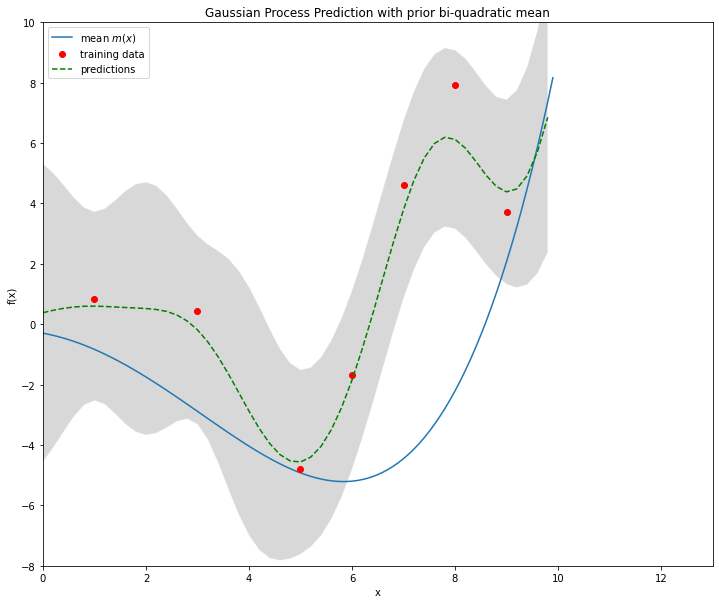

Definition of training data. This is the same data as used in the GP regression example in the previous section.

xB=np.array([1,2,3,4])

yB=np.array([0.25,0.95,2.3,3.9])

plt.figure(figsize=(12, 10))

plt.plot(xB,yB,"or",label="Training Data")

plt.legend()

plt.show()

Define the positions at wich the function values shall be predicted. In contrast to the GP regression in the previous section, below we predict numeric values not only at the three locations \(5,6\) and \(7\), but at all locations in the range from 0 to 7 with a resolution of \(0.2\):

xPred=np.arange(0,7,0.2)

Define the hyperparameters for the mean- and covariance function

c2=0.25 # constant coefficient of quadratic term in prior mean function

ell=1 # horizontal length scale parameter in the squared exponential function

sigmaF2=2 #sigmaF2 is the variance of the multivariate gaussian distribution

sigmaN2=0.005 #sigmaN2 is the variance of the regression noise-term

Definition of mean- and covariance function. Here, the mean function is a quadratic polynomial and the covariance function is the squared exponential.

def priormean(xin):

return c2*xin**2

def corrFunc(xa,xb):

return sigmaF2*np.exp(-((xa-xb)**2)/(2.0*ell**2))

Calculate the values mx of the mean function in the range from 0 to 7. These values are just used for plotting. The values mxB are the mean-function values at the training data x-values, i.e. the mean-vector. These values are applied for calculating the prediction.

mx=priormean(xPred)

mxB=priormean(xB)

Calculate the covariance matrix by evaluating the covariance function at the training data x-values.

KB=np.zeros((len(xB),len(xB)))

for i in range(len(xB)):

for j in range(i,len(xB)):

noise=(sigmaN2 if i==j else 0)

k=corrFunc(xB[i],xB[j])+noise

KB[i][j]=k

KB[j][i]=k

print('-'*10+' Matrix KB '+'-'*10)

print(KB.round(decimals=3))

---------- Matrix KB ----------

[[2.005 1.213 0.271 0.022]

[1.213 2.005 1.213 0.271]

[0.271 1.213 2.005 1.213]

[0.022 0.271 1.213 2.005]]

Calculate the inverse of the covariance matrix

KBInv=np.linalg.inv(KB)

print('-'*10+' Inverse of Matrix KB '+'-'*10)

print(KBInv.round(decimals=3))

---------- Inverse of Matrix KB ----------

[[ 0.953 -0.862 0.519 -0.208]

[-0.862 1.688 -1.219 0.519]

[ 0.519 -1.219 1.688 -0.862]

[-0.208 0.519 -0.862 0.953]]

Calculate the covariance matrix \(K_*\) between training x-values and prediction x-values

Ks=np.zeros((len(xPred),len(xB)))

for i in range(len(xPred)):

for j in range(len(xB)):

k=corrFunc(xPred[i],xB[j])

Ks[i][j]=k

print('-'*10+' Matrix Ks '+'-'*10)

print(Ks.round(decimals=5))

---------- Matrix Ks ----------

[[1.21306 0.27067 0.02222 0.00067]

[1.4523 0.3958 0.03968 0.00146]

[1.67054 0.55607 0.06809 0.00307]

[1.84623 0.75062 0.11227 0.00618]

[1.9604 0.9735 0.17784 0.01195]

[2. 1.21306 0.27067 0.02222]

[1.9604 1.4523 0.3958 0.03968]

[1.84623 1.67054 0.55607 0.06809]

[1.67054 1.84623 0.75062 0.11227]

[1.4523 1.9604 0.9735 0.17784]

[1.21306 2. 1.21306 0.27067]

[0.9735 1.9604 1.4523 0.3958 ]

[0.75062 1.84623 1.67054 0.55607]

[0.55607 1.67054 1.84623 0.75062]

[0.3958 1.4523 1.9604 0.9735 ]

[0.27067 1.21306 2. 1.21306]

[0.17784 0.9735 1.9604 1.4523 ]

[0.11227 0.75062 1.84623 1.67054]

[0.06809 0.55607 1.67054 1.84623]

[0.03968 0.3958 1.4523 1.9604 ]

[0.02222 0.27067 1.21306 2. ]

[0.01195 0.17784 0.9735 1.9604 ]

[0.00618 0.11227 0.75062 1.84623]

[0.00307 0.06809 0.55607 1.67054]

[0.00146 0.03968 0.3958 1.4523 ]

[0.00067 0.02222 0.27067 1.21306]

[0.0003 0.01195 0.17784 0.9735 ]

[0.00013 0.00618 0.11227 0.75062]

[0.00005 0.00307 0.06809 0.55607]

[0.00002 0.00146 0.03968 0.3958 ]

[0.00001 0.00067 0.02222 0.27067]

[0. 0.0003 0.01195 0.17784]

[0. 0.00013 0.00618 0.11227]

[0. 0.00005 0.00307 0.06809]

[0. 0.00002 0.00146 0.03968]]

Calculate the covariance matrix \(K_{**}\) between prediction x-values

Kss=np.zeros((len(xPred),len(xPred)))

for i in range(len(xPred)):

for j in range(i,len(xPred)):

noise=(sigmaN2 if i==j else 0)

k=corrFunc(xPred[i],xPred[j])+noise

Kss[i][j]=k

Kss[j][i]=k

print('-'*10+' Matrix Kss '+'-'*10)

print(Kss.round(decimals=3))

---------- Matrix Kss ----------

[[2.005 1.96 1.846 ... 0. 0. 0. ]

[1.96 2.005 1.96 ... 0. 0. 0. ]

[1.846 1.96 2.005 ... 0. 0. 0. ]

...

[0. 0. 0. ... 2.005 1.96 1.846]

[0. 0. 0. ... 1.96 2.005 1.96 ]

[0. 0. 0. ... 1.846 1.96 2.005]]

Calculate the prediction

mus=priormean(xPred)

ypred=mus+np.dot(np.dot(Ks,KBInv),(yB-mxB))

print("Prediction: ",ypred)

Prediction: [ 0.0607 0.07144 0.09576 0.1329 0.18343 0.24955 0.33491 0.44404

0.58139 0.75009 0.95099 1.1821 1.43878 1.71466 2.0031 2.29884

2.59926 2.90502 3.21986 3.54962 3.90082 4.27924 4.68881 5.13106

5.60528 6.10899 6.63886 7.19143 7.76378 8.35385 8.96053 9.58348

10.22295 10.87951 11.55381]

Calculate the covariance of the predictions

yvar=np.diag(Kss-np.dot(Ks,np.dot(KBInv,np.transpose(Ks))))

stds=np.sqrt(yvar)

print("Double Standard Deviation: ",2*stds)

Double Standard Deviation: [2.03145 1.67176 1.25471 0.81683 0.41676 0.19976 0.30632 0.39288 0.36953

0.2698 0.19958 0.25702 0.32803 0.32803 0.25702 0.19958 0.2698 0.36953

0.39288 0.30632 0.19976 0.41676 0.81683 1.25471 1.67176 2.03145 2.31537

2.52119 2.65828 2.74206 2.78897 2.813 2.82426 2.82908 2.83097]

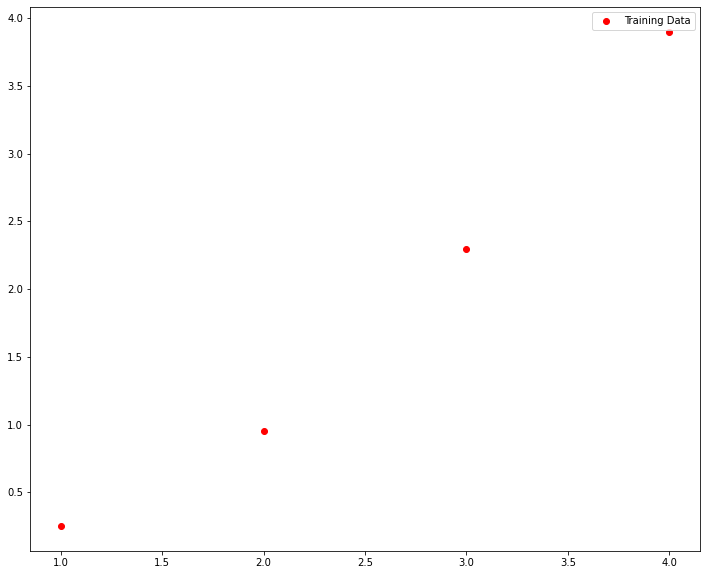

Plot training data and predicitons:

plt.figure(figsize=(12, 10))

plt.plot(xPred,mx,label="mean $m(x)$")

#plt.hold(True)

plt.plot(xB,yB,'or',label="training data")

plt.plot(xPred,ypred,'--g',label="predictions")

plt.text(0.5,8,"$m(x)=0.25 \cdot x^2$ \n$k(x,x')=2 \cdot \exp(-0.5\cdot(x-x')^2)$ \n $\sigma_n^2=0.005$",fontsize=14)

plt.legend(loc=2,numpoints=1)

plt.title('Gaussian Process Prediction with prior quadratic mean')

plt.xlabel('x')

plt.ylabel('f(x)')

plt.axis([0,8,0,16])

# plot 2*standard deviation 95%-confidence interval

fillx = r_[xPred, xPred[::-1]]

filly = r_[ypred+2*stds, ypred[::-1]-2*stds[::-1]]

plt.fill(fillx, filly, facecolor='gray', edgecolor='white', alpha=0.3)

plt.show()

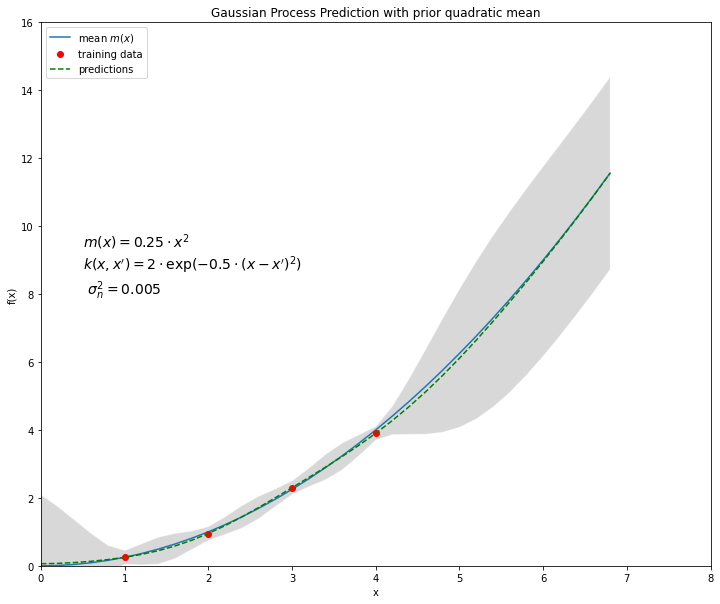

Find optimum hyperparameters for mean- and covariance-function#

import numpy as np

from scipy import r_

from matplotlib import pyplot as plt

import scipy.optimize as opt

xB=np.array([1., 3., 5., 6., 7., 8., 9.])

yB=xB*np.sin(xB)

def objective(x): #Returns Log-Likelihood, which must be optimized

mxB=x[0]*xB**2+x[1]*xB+x[2]+x[6]*xB**3+x[7]*xB**4

KB=np.zeros((len(xB),len(xB)))

for i in range(len(xB)):

for j in range(i,len(xB)):

noise=x[5]**2

k=x[3]**2*np.exp(-((xB[i]-xB[j])**2)/(2.0*x[4]**2))+noise**2

KB[i][j]=k

KB[j][i]=k

KBinv=np.linalg.inv(KB)

return -1*(-0.5* np.log(np.linalg.det(KB))-0.5 * np.dot(np.transpose(yB-mxB), \

np.dot(KBinv,(yB-mxB)))-2*np.log(2*np.pi))

#Define constraints on the hyperparameters

def constr1(x):

return x[4]-1 #horizontal length-scale > 1

def constr2(x):

return 5-x[4] #horizontal length-scale < 5

def constr3(x):

return x[3]-0.8 #vertical length-scale >0.8

x0=(0.1, 0.01, 0.01, 2.0, 1.0, 0.01,0.01,0.01) #Startvalues for optimization

xopt=opt.fmin_cobyla(objective,x0,cons=[constr1,constr2,constr3])

print('-'*10+"Results of optimisation"+'-'*10)

print(xopt)

----------Results of optimisation----------

[-0.23166 -0.31688 -0.29713 2.66702 1. 1.12786 0.00837 0.00272]

#####################Definition of hyperparameters#############################

c4=xopt[7] # constant coefficient of biquadratic term in prior mean function

c3=xopt[6] # constant coefficient of cubic term in prior mean function

c2=xopt[0] # constant coefficient of quadratic term in prior mean function

c1=xopt[1] # constant coefficient of linear term in prior mean function

c0=xopt[2] # constant coefficient constant term in prior mean function

ell=xopt[4] # horicontal length scale parameter in the squared exponential function

sigmaF2=xopt[3] #sigmaF2 is the standard deviation of the multivariate gaussian distribution

sigmaN2=xopt[5] #sigmaN2 is the standard deviation of the regression noise-term

print("Learned mean function m(x) = %1.3f*x^4 + %1.3f*x^3 + %1.3f*x^2+ %1.3f*x + %1.3f"%(c4,c3,c2,c1,c0))

print("Learned cov. function m(x) = (%1.3f)^2 *exp(-(x-x')^2 / (2 * (%1.3f)^2))+ %1.3f^2"%(sigmaF2,ell,sigmaN2))

Learned mean function m(x) = 0.003*x^4 + 0.008*x^3 + -0.232*x^2+ -0.317*x + -0.297

Learned cov. function m(x) = (2.667)^2 *exp(-(x-x')^2 / (2 * (1.000)^2))+ 1.128^2

###################Definition of mean- and covariance function#################

def priormean(xin):

return c4*xin**4+c3*xin**3+c2*xin**2+c1*xin+c0

def corrFunc(xa,xb):

return sigmaF2**2*np.exp(-((xa-xb)**2)/(2.0*ell**2))

x=np.arange(0,10,0.1)

mx=priormean(x)

mxB=priormean(xB)

xPred=np.arange(0,10,0.2)

KB=np.zeros((len(xB),len(xB)))

for i in range(len(xB)):

for j in range(i,len(xB)):

noise=(sigmaN2**2 if i==j else 0)

k=corrFunc(xB[i],xB[j])+noise**2

KB[i][j]=k

KB[j][i]=k

print('-'*10+' Matrix KB '+'-'*10)

print(KB.round(decimals=3))

---------- Matrix KB ----------

[[8.731 0.963 0.002 0. 0. 0. 0. ]

[0.963 8.731 0.963 0.079 0.002 0. 0. ]

[0.002 0.963 8.731 4.314 0.963 0.079 0.002]

[0. 0.079 4.314 8.731 4.314 0.963 0.079]

[0. 0.002 0.963 4.314 8.731 4.314 0.963]

[0. 0. 0.079 0.963 4.314 8.731 4.314]

[0. 0. 0.002 0.079 0.963 4.314 8.731]]

KBInv=np.linalg.inv(KB)

print('-'*10+' Inverse of Matrix KB '+'-'*10)

print(KBInv.round(decimals=3))

---------- Inverse of Matrix KB ----------

[[ 0.116 -0.013 0.002 -0.001 0. -0. 0. ]

[-0.013 0.118 -0.017 0.009 -0.003 0.001 -0. ]

[ 0.002 -0.017 0.159 -0.094 0.032 -0.008 0.001]

[-0.001 0.009 -0.094 0.212 -0.111 0.036 -0.008]

[ 0. -0.003 0.032 -0.111 0.218 -0.111 0.032]

[-0. 0.001 -0.008 0.036 -0.111 0.211 -0.092]

[ 0. -0. 0.001 -0.008 0.032 -0.092 0.157]]

Ks=np.zeros((len(xPred),len(xB)))

for i in range(len(xPred)):

for j in range(len(xB)):

k=corrFunc(xPred[i],xB[j])

Ks[i][j]=k

print('-'*10+' Matrix Ks '+'-'*10)

print(Ks.round(decimals=5))

---------- Matrix Ks ----------

[[4.31426 0.07902 0.00003 0. 0. 0. 0. ]

[5.1651 0.14113 0.00007 0. 0. 0. 0. ]

[5.94128 0.24218 0.00018 0. 0. 0. 0. ]

[6.56613 0.39929 0.00044 0. 0. 0. 0. ]

[6.97216 0.6325 0.00105 0.00001 0. 0. 0. ]

[7.11301 0.96264 0.00239 0.00003 0. 0. 0. ]

[6.97216 1.40765 0.00521 0.00007 0. 0. 0. ]

[6.56613 1.97768 0.01091 0.00018 0. 0. 0. ]

[5.94128 2.66959 0.02197 0.00044 0. 0. 0. ]

[5.1651 3.46227 0.04251 0.00105 0.00001 0. 0. ]

[4.31426 4.31426 0.07902 0.00239 0.00003 0. 0. ]

[3.46227 5.1651 0.14113 0.00521 0.00007 0. 0. ]

[2.66959 5.94128 0.24218 0.01091 0.00018 0. 0. ]

[1.97768 6.56613 0.39929 0.02197 0.00044 0. 0. ]

[1.40765 6.97216 0.6325 0.04251 0.00105 0.00001 0. ]

[0.96264 7.11301 0.96264 0.07902 0.00239 0.00003 0. ]

[0.6325 6.97216 1.40765 0.14113 0.00521 0.00007 0. ]

[0.39929 6.56613 1.97768 0.24218 0.01091 0.00018 0. ]

[0.24218 5.94128 2.66959 0.39929 0.02197 0.00044 0. ]

[0.14113 5.1651 3.46227 0.6325 0.04251 0.00105 0.00001]

[0.07902 4.31426 4.31426 0.96264 0.07902 0.00239 0.00003]

[0.04251 3.46227 5.1651 1.40765 0.14113 0.00521 0.00007]

[0.02197 2.66959 5.94128 1.97768 0.24218 0.01091 0.00018]

[0.01091 1.97768 6.56613 2.66959 0.39929 0.02197 0.00044]

[0.00521 1.40765 6.97216 3.46227 0.6325 0.04251 0.00105]

[0.00239 0.96264 7.11301 4.31426 0.96264 0.07902 0.00239]

[0.00105 0.6325 6.97216 5.1651 1.40765 0.14113 0.00521]

[0.00044 0.39929 6.56613 5.94128 1.97768 0.24218 0.01091]

[0.00018 0.24218 5.94128 6.56613 2.66959 0.39929 0.02197]

[0.00007 0.14113 5.1651 6.97216 3.46227 0.6325 0.04251]

[0.00003 0.07902 4.31426 7.11301 4.31426 0.96264 0.07902]

[0.00001 0.04251 3.46227 6.97216 5.1651 1.40765 0.14113]

[0. 0.02197 2.66959 6.56613 5.94128 1.97768 0.24218]

[0. 0.01091 1.97768 5.94128 6.56613 2.66959 0.39929]

[0. 0.00521 1.40765 5.1651 6.97216 3.46227 0.6325 ]

[0. 0.00239 0.96264 4.31426 7.11301 4.31426 0.96264]

[0. 0.00105 0.6325 3.46227 6.97216 5.1651 1.40765]

[0. 0.00044 0.39929 2.66959 6.56613 5.94128 1.97768]

[0. 0.00018 0.24218 1.97768 5.94128 6.56613 2.66959]

[0. 0.00007 0.14113 1.40765 5.1651 6.97216 3.46227]

[0. 0.00003 0.07902 0.96264 4.31426 7.11301 4.31426]

[0. 0.00001 0.04251 0.6325 3.46227 6.97216 5.1651 ]

[0. 0. 0.02197 0.39929 2.66959 6.56613 5.94128]

[0. 0. 0.01091 0.24218 1.97768 5.94128 6.56613]

[0. 0. 0.00521 0.14113 1.40765 5.1651 6.97216]

[0. 0. 0.00239 0.07902 0.96264 4.31426 7.11301]

[0. 0. 0.00105 0.04251 0.6325 3.46227 6.97216]

[0. 0. 0.00044 0.02197 0.39929 2.66959 6.56613]

[0. 0. 0.00018 0.01091 0.24218 1.97768 5.94128]

[0. 0. 0.00007 0.00521 0.14113 1.40765 5.1651 ]]

Kss=np.zeros((len(xPred),len(xPred)))

for i in range(len(xPred)):

for j in range(i,len(xPred)):

noise=(sigmaN2 if i==j else 0)

k=corrFunc(xPred[i],xPred[j])+noise

Kss[i][j]=k

Kss[j][i]=k

print('-'*10+' Matrix Kss '+'-'*10)

print(Kss.round(decimals=3))

---------- Matrix Kss ----------

[[8.241 6.972 6.566 ... 0. 0. 0. ]

[6.972 8.241 6.972 ... 0. 0. 0. ]

[6.566 6.972 8.241 ... 0. 0. 0. ]

...

[0. 0. 0. ... 8.241 6.972 6.566]

[0. 0. 0. ... 6.972 8.241 6.972]

[0. 0. 0. ... 6.566 6.972 8.241]]

mus=priormean(xPred)

ypred=mus+np.dot(np.dot(Ks,KBInv),(yB-mxB))

print("Prediction: ",ypred)

Prediction: [ 0.38157 0.46039 0.5246 0.56966 0.59363 0.59809 0.5881 0.57076

0.55258 0.53637 0.5184 0.48704 0.42336 0.30413 0.10641 -0.18711

-0.58346 -1.07636 -1.64573 -2.25943 -2.87689 -3.45366 -3.94595 -4.31448

-4.52718 -4.56071 -4.40093 -4.04266 -3.48915 -2.75167 -1.8496 -0.81108

0.326 1.51312 2.69135 3.79373 4.75039 5.49668 5.98351 6.18783

6.12083 5.83129 5.40228 4.94115 4.56462 4.38199 4.48043 4.9154

5.70787 6.84816]

yvar=np.diag(Kss-np.dot(Ks,np.dot(KBInv,np.transpose(Ks))))

stds=np.sqrt(yvar)

print("Double Standard Deviation: ",2*stds)

Double Standard Deviation: [4.93583 4.54478 4.08802 3.62796 3.26874 3.12563 3.24058 3.53169 3.85821

4.10058 4.18716 4.09535 3.85021 3.52547 3.23962 3.12263 3.23387 3.50846

3.8152 4.03888 4.11295 4.02237 3.79841 3.50874 3.23767 3.05236 2.96927

2.95456 2.96164 2.96433 2.96054 2.95694 2.95585 2.9546 2.95204 2.95059

2.95208 2.95462 2.95585 2.95722 2.96163 2.96663 2.9646 2.95651 2.96932

3.05652 3.26759 3.60697 4.02773 4.46227]

plt.figure(figsize=(12, 10))

plt.plot(x,mx,label="mean $m(x)$")

#plt.hold(True)

plt.plot(xB,yB,'or',label="training data")

plt.plot(xPred,ypred,'--g',label="predictions")

plt.legend(loc=2,numpoints=1)

plt.title('Gaussian Process Prediction with prior bi-quadratic mean')

plt.xlabel('x')

plt.ylabel('f(x)')

plt.axis([0,13,-8,10])

# plot 2*standard deviation 95%-confidence interval

fillx = r_[xPred, xPred[::-1]]

filly = r_[ypred+2*stds, ypred[::-1]-2*stds[::-1]]

plt.fill(fillx, filly, facecolor='gray', edgecolor='white', alpha=0.3)

plt.show()